There was (and probably will be for a while) a bit of interest for the challenge initially posted for Java, yet it turned out to be an interesting task and spread all over. The One Billion Rows Challenge. Here - gunnarmorling/1brc. I have heard about it around a month ago, and added it to a “some day” list. Now I finally tried myself in it.

Short on a challenge itself. In essence, it is easy task - read rows of well-formatted data line by line and calculate a few measurements, that’s like beginning of programming tasks. But with that misleading simplicity comes a nuance - there are 1 billion of lines to process, and make it as fast as you can. When you have something measured in billions, the complexity quickly goes into the outer space. A bit of math: with every nanosecond of slow down on a line processing program takes a second longer. How often do you think about the program performance in terms of nanoseconds?

Speaking of me, I have a little experience with optimisations to such level. I think about the code in terms of its performance with every task, but there is rarely a need to process such large collections of data in one take and fast. They also costly in time, so premature optimisation has never been good, just reasonably fast is good enought. Given that, my knowledge of data structures I’m going to use, file reading, memory access and other things were the only one who helped in solving that task.

I didn’t captured all the steps like from the most naive implementation to the best I have reached, but most of that. The most unoptimised version I came up initially by reading line by line should took around 30 to 40 minutes to complete, and that’s an approximation, because faster implementation could be written in half of that time.

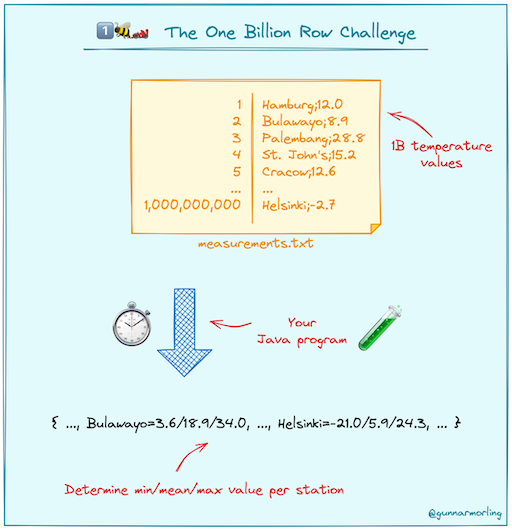

Recap: The Challenge

The challenge is to read a file with a billion rows, each row containing a city name and a temperature. You need to calculate min, max and average temperature for each city. The file is well-formatted, so we can assume that each line contains a city name and a temperature (with only one fraction digit) separated by a semicolon. The file is around 13GB in size. The output should be sorted by city name. The challenge is to make it as fast as possible. Here is a nice illustration from the original repo:

Replacing “Java” everywhere with “Swift”, we are taking off now.

Test conditions

Most of the time I’ve been testing on my MacBook M1 without power plugged in. Despite the fact I do not have any power-save

modes turned on, Apple is decreasing CPU performance slightly. So when I’ve started running timings on plugged in laptop,

I have had a speed up around 0.8s to every test. For the first implementations it was insignificant difference, but as

running time decrease it gives a visible change. The numbers I am presenting here has been measured using hyperfine tool

with only terminal running (and bunch of staff macOS running in background), plugged in to a power, MacBook M1 Pro with

16GB of memory and macOS Sonoma 14.4.

Take one: Not so naive

Skipping aside lost naive version, let’s think about obvious and easy to do facts for the start. For example, how are we going to represent data once it has been parsed? We need to calculate min, max and average. First two can be calculated at the time of reading, for average we need all values. In the most naive version it would be an array of these value, but that is a waste of time and space. To calculate average we only need a sum and a count, just two numbers:

struct Measurement {

let min: Double

let max: Double

let avg: Double

let count: Int

}

Right from the start we can also make one more improvement: parsing doubles. That a much more complex task than parsing integer and perform operations on it. We know that all the numbers in the input has exactly one digit in the decimal part, so we can work with integers most of the time, converting them into doubles only in the final steps:

struct Measurement {

let name: String

let min: Int

let max: Int

let avg: Int

let count: Int

}

All the file needs to be loaded into a memory then. Instead of friction between file system and memory, we load it in a Data to

have faster access to it. System may decide to store that in a swap, but it is anyway faster that reading from the file.

We store results in a dictionary Dictionary<Int, Measurement>. Key is not a String, because use of a city name as

a key is not efficient for two reasons. First, you need to parse the name into a string (or at least bytes buffer,

yet still not effective). Second, default hashing is not so fast and will reiterate over the name more likely.

To solve that, we can compute hash while we parse the name. Then we won’t need to convert name to a string each time,

but only the first one. I only later realized that parsing bytes into a string could be done much later, but if you

think about it - there is not a lot of difference. We know that there are only 413 different stations, and more demanding

version has 10K of them, yet neither of this will make a significant change here due to reading it only one time.

Finally, it is important to allocate dictionary with capacity beforehand. We know how much stations are there, so we can use it to benefit by reducing allocations as we add elements to a dictionary, so we allocate each dictionary with initial capacity of 500 keys:

var result = Dictionary<Int, Measurement>(minimumCapacity: 500)

You can check the whole code (a bit messy though) at this commit.

That gives us running time around 2 minutes 45 seconds. Not bad for such simple thing we’ve done and reading file line by line.

CPU and chunks

We are clearly not using the full potential of the modern computers with our implementation. For example, my MacBook Pro M1 has 10 cores, and we are using only 1 for processing 1 line at the time. Let’s change this.

To parallelise processing effectively, we need to split a whole file into chunks, so they could be processed independetly. We don’t know yet how many chunks will give us the best performace, so let’s make number of chunks a configuarable parameter and play with it later.

let chunkCount = 10 // setting to number of cores for start

let chunkSize = fileSize / chunksCount

Currently, we are going to load 1.3GB of data for each chunk and process it on one of the cores. Now, the lines in the

file has different lengths and each chunk is more likely to end on an arbitrary position in the line, more likely to be

somewhere in the middle. But we need to have clear line boundaries, so using chunkSize as starting point we are going

to adjust it to be exactly till the end of line:

var offset = 0

func nextChar() -> UInt8 {

// read char from file handle and update offset

}

while offset <= maxOffset {

let chunkStart = offset

offset += chunkSize

// 10 is ASCII code for new line

while offset <= maxOffset && currChar != 10 {

currChar = nextChar()

}

// restore boundary if needed

if offset > maxOffset {

offset = maxOffset

}

let currChunkSize = offset - chunkStart

process(start: chunkStart, size: currChunkSize)

// read char after new line

currChar = nextChar()

}

The code above reads till the end of a line after we skipped to the end of a chunk, which we can consider to be extremely

small amount of work - line is a city name (presumably around 40 characters max) and temperature (up to 5 chars in total),

so in worst case we have to run inner while loop ~45 times, and with 10 chunks it is 450 for the worst case scenario. If

we will increase number of chunks significatly, e.g. to a few thousands, it will take 45 * 2000 = 90_000 iterations. That

is a still a small amount of time (~0.05 seconds), which could be a subject for optimisation if nothing else left to optimise,

but we can consider this as irrelative, since in real case it is more likely to by around half of that time anyway.

To run processing of chunks we are going to use new Swift Concurrency capabilities and task group. The implementation is already has taken care of not scheduling too much tasks avoiding thread explosion, still we have to be mindfull of chunks being not too small. The decompose it even further, we are going to introduce two actors: one for reading a chunk of data, second for parsing it into a dictiorary as partial result.

typealias PartialResult = [Int64: Measurement]

while offset <= maxOffset {

// ...

}

let result = await withTaskGroup(of: PartialResult.self) { group in

for chunk in chunks {

group.addTask {

let reader = ChunkReader(fd: fd)

let data = await reader.run(start: chunkStart, size: currChunkSize)

let parser = LineParser()

let partialResult = await parser.run(data: data)

return partialResult

}

}

var result = PartialResult(minimumCapacity: 500)

for await partial in group {

merge(partial, into: result)

}

return result

}

actor ChunkReader {

private let fd: FileDescriptor

init(fd: FileDescriptor) {

self.fd = fd

}

func run(start: Int, size: Int) -> Data {

// read raw data

}

}

actor LineParser {

func run(data: Data) -> PartialResult {

// parse lines from the loaded data

}

}

This will allow us to utilise CPU at max by constantly running reading or parsing, without blocking. Actors there aren’t actually protecting any shared state, but act as isolation regions for running tasks.

We also need to adjust our Measurement structure to contain Data for name instead of conversion to a string. We will

only convert it to a string when the result needs to be displayed.

struct Measurement {

let name: Data

// ...

}

As a result, after some play with chunks number, by using 1024 chunks, the time has been reduced drastically to ~25 seconds. The implementation is available here.

That is a good result so far, but we can do better, there is still a room for improvement.

Immediate scheduling and more effective file reading

We create group to run tasks after chunks has been collected. And chunk are collected sequentially, meanining we are loosing valuable time waiting for them all to be defined first. Instead, we are going to put scanning for chunks as part of a task group:

let result = await withTaskGroup(of: PartialResult.self) { group in

while offset <= maxOffset {

// ...

group.addTask {

// ...

}

}

}

That isn’t going to get us a lot of speed up, but still we are using resources more consciously.

The file reading can be improved as well. At the time we were using FileHandle abstraction to read from the file,

but it is a wrapper over C API, that adds overhead, and uses Data type, which might be not as efficient as we expect it

to be, since we actually just need an array of UInt8, which is returned by C API, so we can avoid needless conversions

back and forth.

So what we are going to do is replace FileHandle with fopen:

let file = fopen(path, "r")!

Then, read into a byte array:

let buffer = UnsafeMutableRawPointer.allocate(byteCount: chunkSize, alignment: MemoryLayout<UInt8>.alignment)

fread(buffer, 1, chunkSize, file)

let rawBytes = buffer.bindMemory(to: UInt8.self, capacity: chunkSize)

let byteArray = Array(UnsafeBufferPointer(start: rawBytes, count: chunkSize))

buffer.deallocate()

Since we aren’t using Data anywhere, Measurement has to change:

struct Measurement {

let name: ArraySlice<UInt8>

// ...

}

Use of ArraySlice allows us avoid copy of a memory each time, which is clearly saves us a lot.

With that changes taken into effect, the time has been further reduced to 10s. Implementation is here.

Running out of ideas

At this point I have almost gone out of improvements. A few tweaks has made runnig time to decrease to 9s:

- Change chunks agait to 2048 now, since we have faster processing, we can benefit from more chunks.

- Fix hashing for final result collection.

- Add inlining to some of the methods to be forced.

- Simplify temperature parsing.

Later, I was suspecting that hashing I was using is giving me collisions, so I’ve changed it to have FNV-1a algorithm implementation, which we discuss later. That haven’t made any performance improvements.

State of the code at this point can be found here.

File reading: one more time

If we take a look at the reading of the file, we can notice that it doesn’t benefit a lot from concurrency, we still have exclusive access and shared state in form of a pointer. That is a bottleneck in our reading part. We also open file for each chunk we are processing, because file handle cannot be passed safely between concurrently running code. On the other hand, there is a file descriptor, which is a safe alternative to use for faster concurrent access.

We are going to replace our file reading to use file descriptor:

- Create a single file descriptor, so we open file only once, then share it among readers.

- Use

preadAPI equivalent onFileDescriptorto read from file concurrently. - Limit number of readers to the number of cores.

Most of the changes aren’t hard to implement, yet we need to address fgetc we’ve been using before. Due to a stateful

behaviour, it have been advancing automatically for us. And now we are going to avoid modifying descriptor state. To

handle that, we create a replication of this function:

func getc() -> UInt8 {

let buffer = UnsafeMutableRawBufferPointer.allocate(byteCount: 1, alignment: MemoryLayout<UInt8>.alignment)

defer {

buffer.deallocate()

}

let bytesRead = try! fd.read(fromAbsoluteOffset: offset, into: buffer)

offset += bytesRead

return buffer.bindMemory(to: UInt8.self)[0]

}

And replace calls to fgetc() with getc().

Reading using descriptors given around 2s of improvement with the time down to ~7s to process 1 billion rows. With power off measurements in README this version sits by the link.

10K and capacity

The challenge has another more demanding dataset, where it is a 10k different stations instead of 413 in the default. It was interesting for me how well implementation will perform on this dataset, since I’ve made some assumtions on dictionary capacity. Without capacity modifications, it takes around twice more to process - 12 seconds.

At this point I have modified capacity to 11k (slightly more than set), and remembered quality of many of the containers -

they grow by doubling their underlying storage. So instead of setting to somewhat random number, I’d better use power of 2.

14 is the least power greater than 10k, so here we go: let capacity = 1 << 14. This little change has lead to a drasticall

improvement in running time reduced to 8.8s. We just sliced off 3 seconds just by using better capacity.

At this point I have already had an assumption that despite setting initial capacity for dictionary to 500, it isn’t enough. So I’ve tried to run 10k improvement on default dataset, and have got 0.5s improvement with running time dropped to 6.5s.

Final implementation: click here.

Ideas for further improvement

As for now, I have mostly gone out of ideas on how to improve it further. Reading is fast, the main bottlenecks are in parsing.

More likely, I we could avoid conversion to [UInt8] and use pointer we advance over, we would be able to reduce time, since there won’t be an overhead for array creation and access checks it performs on subscript. It happend to be more complex task that I thought and as for now it still an idea to check.

The second bottleneck is dictionary. Despite its implementation being effective, and we are using effective hashing paired with thoughful memory allocations, it is still costs more than array access, plus we have to create new structure each time. Hashing algorithm we are using are not producing collisions on our set of data, so we can modify it to act as array indexes and migrate from a dictionary to an array. I would expect it to give also huge time improvement, if these assumptions will work.

Temperature has constraints with min and max values from -99.9 to 99.9, plus only one fraction digit. We already do benefit from 1 fraction digit by setting this implicitly in code, but the range is still has a room for improvement.

Rest of possible improvements supposedly will include SIL generation analysis, looking at assembly code, use of SIMD, and so on. It would be interesting to dive into that some day, but as for now there are still options to try before that.

Final thoughts

It turned out to be complex, but not so much, task for me. 6.5 seconds on 1 billion rows seems to be a pretty good result. Such tasks make you learn some internals of the language you are not bump into very often if such performance is not your main concern.